Fancy a phone case made of artificial skin?

Researchers from Bristol and Paris are extending touch technology with an artificial skin-like membrane that can be added to interactive devices such as phones, wearables and computers.

The Skin-On interface, developed by the University of Bristol in partnership with Télécom ParisTech and Sorbonne University, mimics human skin not only in appearance but also in sensing resolution. The team’s study was presented at the 32nd ACM Symposium on User Interface Software and Technology (UIST 2019), held in New Orleans in mid-October.

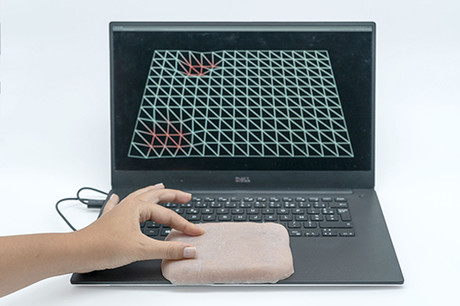

The researchers took a bio-driven approach, developing a multilayer silicone membrane that mimics the layers of human skin. It consists of a textured surface layer, an electrode layer of conductive threads and a hypodermis layer.

Not only is the interface more natural than a rigid casing, it can also detect a wide range of gestures. The artificial skin lets devices ‘feel’ the user’s grasp, including its pressure and location, and recognise interactions such as tickling, caressing, twisting and pinching.

“This is the first time we have the opportunity to add skin to our interactive devices,” said Dr Anne Roudaut from the University of Bristol, who supervised the research. “The idea is perhaps a bit surprising, but skin is an interface we are highly familiar with so why not use it and its richness with the devices we use every day?”

“Artificial skin has been widely studied in the field of robotics but with a focus on safety, sensing or cosmetic aims,” added lead author Marc Teyssier, from Télécom ParisTech. “This is the first research we are aware of that looks at exploiting realistic artificial skin as a new input method for augmenting devices.”

The researchers created a phone case, computer touch pad and smart watch to demonstrate how touch gestures on the Skin-On interface can convey expressive messages for computer-mediated communication with humans or virtual characters. As explained by Teyssier, “We implemented a messaging application where users can express rich tactile emotions on the artificial skin. The intensity of the touch controls the size of the emojis. A strong grip conveys anger while tickling the skin displays a laughing emoji and tapping creates a surprised emoji.”
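The mapping Teyssier describes (gesture type selects the emoji, touch intensity sets its size) can be illustrated with a minimal Python sketch. It assumes the skin's readings have already been classified into a gesture and reduced to an intensity between 0 and 1; the names `Gesture`, `touch_to_emoji`, `MIN_SIZE` and `MAX_SIZE`, and the size values, are hypothetical and not taken from the Skin-On paper.

```python
from dataclasses import dataclass
from enum import Enum


class Gesture(Enum):
    # Hypothetical gesture labels for the three interactions named in the article.
    GRIP = "grip"
    TICKLE = "tickle"
    TAP = "tap"


# Gesture-to-emoji mapping as described for the messaging demo.
EMOJI_FOR_GESTURE = {
    Gesture.GRIP: "😠",    # a strong grip conveys anger
    Gesture.TICKLE: "😂",  # tickling displays a laughing emoji
    Gesture.TAP: "😮",     # tapping creates a surprised emoji
}

# Hypothetical display sizes in points (assumption, not from the paper).
MIN_SIZE = 16
MAX_SIZE = 96


@dataclass
class EmojiMessage:
    emoji: str
    size: int


def touch_to_emoji(gesture: Gesture, intensity: float) -> EmojiMessage:
    """Map a classified gesture and a normalized intensity (0.0-1.0) to an emoji."""
    # Clamp intensity so out-of-range sensor values stay within the display range.
    level = min(max(intensity, 0.0), 1.0)
    # Linear interpolation: stronger touches produce larger emojis.
    size = round(MIN_SIZE + level * (MAX_SIZE - MIN_SIZE))
    return EmojiMessage(EMOJI_FOR_GESTURE[gesture], size)


if __name__ == "__main__":
    print(touch_to_emoji(Gesture.GRIP, 1.0))
    print(touch_to_emoji(Gesture.TAP, 0.25))
```

A linear size scale is simply the most direct reading of "the intensity of the touch controls the size"; the actual application may scale sizes differently.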

The paper describes all the steps needed to replicate this research, and the authors are inviting developers interested in Skin-On interfaces to get in touch. The researchers say the next step will be making the skin even more realistic, and they have already started looking at embedding hair and temperature features, which could be enough to give devices goosebumps.

“This work explores the intersection between man and machine,” said Dr Roudaut. “We have seen many works trying to augment human with parts of machines — here we look at the other way around and try to make the devices we use every day more like us, ie, human-like.”
