Deep learning enables more accurate motion tracking

Researchers from Tohoku University have developed a new sensing method that makes tracking movement easier and more efficient. The method uses deep learning and a structure-aware temporal bilateral filter to capture dexterous 3D motion data from a flexible magnetic flux sensor array. It has been described in a paper available on IEEE Xplore.

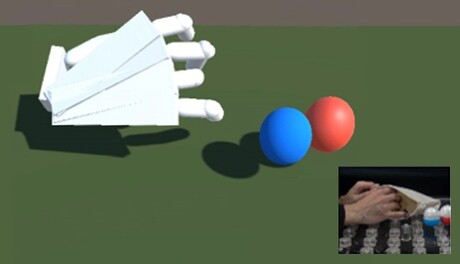

Dexterous 3D motion data has many uses: biologists can record detailed movements of small animals in their living environments, scientists can track the flow of fluids, and researchers can track finger movements and objects being manipulated by users in virtual reality. Optical cameras are the most prominent method of tracking movement, but they struggle with accuracy and reliability when the target is hidden: if a small animal burrows out of sight, or if fingers or objects obscure the view, the camera fails to detect the motion. Magnetic tracking technology is also used for dexterous motion, but the classic tracking method introduces bias, and magnetic sources either suffer from a dead-angle problem or require bulky markers.

The research team built their new method by applying a deep neural network and a novel structure-aware temporal bilateral filter to a new magnetic tracking principle. First, the neural network learns the regression from simulated flux values to the 3D configuration of the LC coils at any location and orientation. The new filter then corrects the network's estimates to reconstruct smooth and accurate motion. The markers do not require batteries, so observation time can be maximised.
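
To give a feel for the filtering step, here is a minimal sketch of a plain temporal bilateral filter applied to a sequence of 3D positions. It is not the researchers' structure-aware variant, whose details are not given here. The function name, the window `radius` and the two bandwidths `sigma_t` and `sigma_v` are all assumptions made for illustration. Each output position is a weighted average of nearby frames, where frames count for less if they are far away in time or far away in space.

```python
import math


def temporal_bilateral_filter(samples, radius=2, sigma_t=1.0, sigma_v=5.0):
    """Smooth a list of (x, y, z) positions with a plain temporal bilateral filter.

    Hypothetical parameters, chosen for illustration only:
      radius  - number of frames considered on each side of the current frame
      sigma_t - bandwidth of the weight on the time gap (in frames)
      sigma_v - bandwidth of the weight on the spatial gap (same unit as positions)
    """
    smoothed = []
    for i, centre in enumerate(samples):
        lo = max(0, i - radius)
        hi = min(len(samples), i + radius + 1)
        weight_sum = 0.0
        acc = [0.0, 0.0, 0.0]
        for j in range(lo, hi):
            neighbour = samples[j]
            # Weight falls off with distance in time and with distance in space.
            time_w = math.exp(-((i - j) ** 2) / (2 * sigma_t ** 2))
            dist = math.dist(centre, neighbour)
            value_w = math.exp(-(dist ** 2) / (2 * sigma_v ** 2))
            w = time_w * value_w
            weight_sum += w
            for k in range(3):
                acc[k] += w * neighbour[k]
        # weight_sum is at least 1.0 because the frame always weights itself fully.
        smoothed.append(tuple(a / weight_sum for a in acc))
    return smoothed


if __name__ == "__main__":
    # A marker at rest with one frame of small jitter (units are arbitrary here).
    track = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
             (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
    for position in temporal_bilateral_filter(track):
        print(position)
```

Because the spatial weight shrinks quickly for distant values, small jitter is averaged away while a genuinely large, fast movement is left mostly intact, which is the usual reason for choosing a bilateral filter over a simple moving average.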

As a result, the new integrated system can track multiple LC coils at 100 Hz with millimetre-level accuracy. Motion lost to dead angles can be reconstructed thanks to the system’s self-learning.

“We can now track complex motions with higher accuracy,” said Yoshifumi Kitamura, co-author of the study.

“The application of our research is widespread. Hand motions can be tracked to make creating smooth animations easier, markers can be put into fluids to track its flow and tracking can be placed on small animals.”
