Intelligent sensing abilities make robots smarter

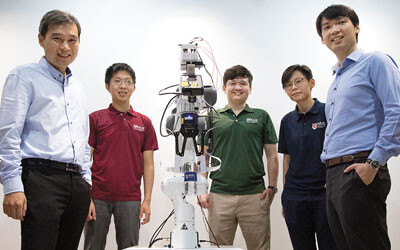

Computer scientists and materials engineers from the National University of Singapore (NUS) have developed a way to equip robots with an exceptional sense of touch, as well as the ability to process sensory information quickly and intelligently.

Picking up a can of soft drink may be a simple task for humans, but it is a complex one for a robot: it has to locate the object, deduce its shape, determine the right amount of strength to use and grasp the object without letting it slip. Most of today’s robots operate solely based on visual processing, which limits their capabilities.

Seeking to make robots smarter, NUS researchers developed a sensory-integrated artificial brain system that mimics biological neural networks and can run on a power-efficient neuromorphic processor, such as Intel’s Loihi chip. This novel system integrates artificial skin and vision sensors, enabling robots to draw accurate conclusions in real time about the objects they are grasping, based on the data captured by the vision and touch sensors.

“The field of robotic manipulation has made great progress in recent years; however, fusing both vision and tactile information to provide a highly precise response in milliseconds remains a technology challenge,” said Assistant Professor Benjamin Tee, who is co-leading the NUS project with Assistant Professor Harold Soh. “Our recent work combines our ultrafast electronic skins and nervous systems with the latest innovations in vision sensing and AI for robots so that they can become smarter and more intuitive in physical interactions.”

In the new robotic system, the NUS team applied an advanced artificial skin known as Asynchronous Coded Electronic Skin (ACES), developed by Asst Prof Tee and his team in 2019. This novel sensor detects touches more than 1000 times faster than the human sensory nervous system. It can also identify the shape, texture and hardness of objects 10 times faster than the blink of an eye.

“Making an ultrafast artificial skin sensor solves about half the puzzle of making robots smarter,” Asst Prof Tee said. “They also need an artificial brain that can ultimately achieve perception and learning as another critical piece in the puzzle.”

To break new ground in robotic perception, the NUS team explored neuromorphic technology — an area of computing that emulates the neural structure and operation of the human brain — to process sensory data from the artificial skin. As Asst Prof Tee and Asst Prof Soh are members of the Intel Neuromorphic Research Community (INRC), it was a natural choice to use Intel’s Loihi neuromorphic research chip for their new robotic system.

In their initial experiments, the researchers fitted a robotic hand with the artificial skin and used it to read Braille, passing the tactile data to Loihi via the cloud to convert the micro bumps felt by the hand into semantic meaning. Loihi achieved over 92% accuracy in classifying the Braille letters, while using 20 times less power than a normal microprocessor.

Asst Prof Soh’s team improved the robot’s perception capabilities by combining both vision and touch data in a spiking neural network. In their experiments, the researchers tasked a robot equipped with both artificial skin and vision sensors with classifying various opaque containers holding differing amounts of liquid. They also tested the system’s ability to identify rotational slip, which is important for stable grasping.
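To give a rough feel for how a spiking neural network can combine two sensor streams, here is a minimal sketch of a single leaky integrate-and-fire neuron that receives spikes from a touch channel and a vision channel. It is an illustration only and not the NUS team's model: the function name `lif_fuse`, the weights, the leak factor and the threshold are all assumptions chosen for the example.

```python
def lif_fuse(touch_spikes, vision_spikes, w_touch=0.6, w_vision=0.6,
             leak=0.9, threshold=1.0):
    """Output spike train of one leaky integrate-and-fire neuron.

    All parameter values are illustrative assumptions, not figures
    from the research described in the article.
    """
    potential = 0.0
    output = []
    for t_spk, v_spk in zip(touch_spikes, vision_spikes):
        # Decay the stored potential, then add the weighted input spikes.
        potential = leak * potential + w_touch * t_spk + w_vision * v_spk
        if potential >= threshold:
            output.append(1)   # the neuron fires
            potential = 0.0    # and resets
        else:
            output.append(0)
    return output


# Hypothetical spike trains: 1 means the sensor emitted a spike at that step.
touch = [1, 0, 1, 0, 0, 1]
vision = [0, 0, 1, 0, 0, 1]
fused = lif_fuse(touch, vision)
touch_only = lif_fuse(touch, [0] * len(touch))
```

With these assumed values, the neuron fires at the last time step only when both channels spike together, which is the basic sense in which coincident evidence from two sensors reinforces a response.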

In both tests, the spiking neural network that used both vision and touch data was able to classify objects and detect object slippage. The classification was 10% more accurate than a system that used only vision. Moreover, using a technique developed by Asst Prof Soh’s team, the neural networks could classify the sensory data while it was being accumulated, unlike the conventional approach where data is classified after it has been fully gathered. In addition, the researchers demonstrated the efficiency of neuromorphic technology: Loihi processed the sensory data 21% faster than a top performing graphics processing unit (GPU), while using more than 45 times less power.
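The idea of classifying data while it is still being accumulated can be sketched as a running tally of evidence per class, with a prediction available at every time step. The sketch below is a generic illustration, not the technique developed by Asst Prof Soh’s team: the function `classify_online`, the spike-count voting rule and the `margin` stopping criterion are assumptions made for the example.

```python
def classify_online(spike_stream, num_classes, margin=3):
    """Yield (timestep, predicted_class, confident) after every time step.

    spike_stream: iterable of lists; each list holds the class indices of
    the output neurons that fired at that step (hypothetical format).
    Requires num_classes >= 2. Stops early once the leading class is ahead
    of the runner-up by at least `margin` spikes.
    """
    counts = [0] * num_classes
    for t, spikes in enumerate(spike_stream):
        for c in spikes:
            counts[c] += 1
        # Rank classes by accumulated spike count, highest first.
        ranked = sorted(range(num_classes), key=lambda c: counts[c], reverse=True)
        best, runner_up = ranked[0], ranked[1]
        confident = counts[best] - counts[runner_up] >= margin
        yield t, best, confident
        if confident:
            return  # decision reached before the stream has ended


# Hypothetical output spikes over seven time steps for three classes.
stream = [[0], [1], [1], [1, 0], [1], [1], [2]]
results = list(classify_online(stream, num_classes=3))
```

A conventional pipeline would wait for all seven steps before producing a label; here a provisional label exists from the first step, and the loop stops as soon as the assumed confidence margin is met.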

“We’re excited by these results,” Asst Prof Soh said. “They show that a neuromorphic system is a promising piece of the puzzle for combining multiple sensors to improve robot perception. It’s a step towards building power-efficient and trustworthy robots that can respond quickly and appropriately in unexpected situations.”

The team’s research findings were presented at the 2020 Robotics: Science and Systems virtual conference. Moving forward, Asst Prof Tee and Asst Prof Soh plan to further develop their novel robotic system for applications in the logistics and food manufacturing industries, where there is a high demand for robotic automation, especially in the post-COVID era.
