AI system for precise recognition of hand gestures

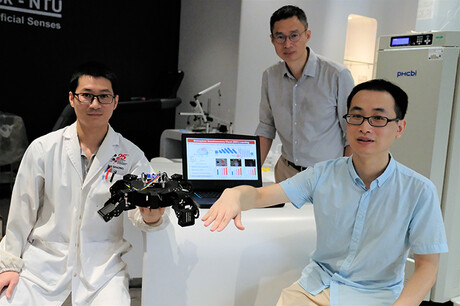

Scientists from Nanyang Technological University, Singapore (NTU) and the University of Technology Sydney (UTS) have developed an artificial intelligence system that recognises hand gestures by combining skin-like electronics with computer vision.

Over the last decade, AI recognition of human hand gestures has been adopted in high-precision surgical robots, health monitoring equipment and gaming systems. Gesture recognition systems that were initially visual-only have since been improved by integrating inputs from wearable sensors that re-create the skin’s sensing (or somatosensory) ability, an approach known as ‘data fusion’.

However, gesture recognition precision is still hampered by low-quality data on both sides: signals from wearable sensors, which are typically bulky and make poor contact with the user, and visual input degraded by visually blocked objects and poor lighting. Integrating visual and sensory data poses a further challenge, as the two are mismatched datasets that must be processed separately and merged only at the end, which is inefficient and leads to slower response times.

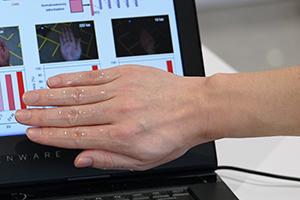

To tackle these challenges, the NTU–UTS team created a data fusion system that combines skin-like stretchable strain sensors, made from single-walled carbon nanotubes, with an AI approach that resembles the way the brain handles skin sensing and vision together. Their work has been described in the journal Nature Electronics.

The scientists developed their bioinspired AI system by combining three neural network approaches in one system: a convolutional neural network, which is a machine learning method for early visual processing; a multilayer neural network for early somatosensory information processing; and a sparse neural network to fuse the visual and somatosensory information together. The result is a system that is claimed to recognise human gestures more accurately and efficiently than existing methods.
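The three-part structure described above can be illustrated with a minimal sketch. This is not the researchers' implementation: the layer sizes, the number of strain channels and gesture classes, the random untrained weights and all function names are assumptions made for illustration, and the randomly masked fusion layer is only a simple stand-in for the sparse neural network named in the article.

```python
import random

def conv2d_valid(image, kernel):
    """Slide a small kernel over a 2D image (no padding), then apply ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        row = []
        for j in range(len(image[0]) - kw + 1):
            s = sum(image[i + a][j + b] * kernel[a][b]
                    for a in range(kh) for b in range(kw))
            row.append(max(0.0, s))
        out.append(row)
    return out

def dense(x, weights, bias):
    """Fully connected layer with ReLU: one output per weight row."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]

def random_matrix(rows, cols, rng, keep=1.0):
    """Random weights; each entry is zeroed with probability 1 - keep."""
    return [[rng.uniform(-1, 1) if rng.random() < keep else 0.0
             for _ in range(cols)] for _ in range(rows)]

def build_model(n_strain=5, n_gestures=10, image_size=8, seed=0):
    """Assemble untrained weights; every size here is a hypothetical choice."""
    rng = random.Random(seed)
    n_visual = (image_size - 2) ** 2  # flattened output of one 3x3 kernel
    n_somato = 6                      # hidden units for the strain signals
    return {
        "kernel": random_matrix(3, 3, rng),
        "somato_w": random_matrix(n_somato, n_strain, rng),
        "somato_b": [0.0] * n_somato,
        # Fusion weights are mostly zero: a crude stand-in for sparsity.
        "fusion_w": random_matrix(n_gestures, n_visual + n_somato, rng, keep=0.2),
        "fusion_b": [0.0] * n_gestures,
    }

def predict(model, image, strain):
    """Return (index of the highest-scoring gesture, all gesture scores)."""
    # Early visual processing: convolution over the camera image.
    visual = [v for row in conv2d_valid(image, model["kernel"]) for v in row]
    # Early somatosensory processing: dense layer over strain readings.
    somato = dense(strain, model["somato_w"], model["somato_b"])
    # Fusion: both feature sets feed one sparsely connected output layer.
    fused = visual + somato
    scores = [sum(w * v for w, v in zip(row, fused)) + b
              for row, b in zip(model["fusion_w"], model["fusion_b"])]
    return scores.index(max(scores)), scores

# Usage with made-up inputs: an 8x8 grayscale image and five strain readings.
model = build_model()
image = [[((i + j) % 4) / 3 for j in range(8)] for i in range(8)]
strain = [0.2, 0.7, 0.1, 0.5, 0.9]
gesture, scores = predict(model, image, strain)
print("predicted gesture index:", gesture)
```

Because the weights are random, the predicted index carries no meaning; the sketch only shows how visual and somatosensory features can be combined in a single layer rather than classified separately and merged at the end.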

“Our data fusion architecture has its own unique bioinspired features which includes a manmade system resembling the somatosensory-visual fusion hierarchy in the brain,” said NTU’s Professor Chen Xiaodong, lead author of the study. “We believe such features make our architecture unique to existing approaches.

“Compared to rigid wearable sensors that do not form an intimate enough contact with the user for accurate data collection, our innovation uses stretchable strain sensors that comfortably attach onto the human skin. This allows for high-quality signal acquisition, which is vital to high-precision recognition tasks.”

As a proof of concept, the team used hand gestures to steer a robot through a maze. With gesture recognition powered by the bioinspired AI system, the robot was guided through the maze with zero errors, compared to six recognition errors made by a visual-based recognition system.

High accuracy was also maintained when the new AI system was tested under poor conditions, including noise and unfavourable lighting. The system worked effectively in the dark, achieving a recognition accuracy of over 96.7%.

“The secret behind the high accuracy in our architecture lies in the fact that the visual and somatosensory information can interact and complement each other at an early stage before carrying out complex interpretation,” said NTU’s Dr Wang Ming, first author of the study. “As a result, the system can rationally collect coherent information with less redundant data and less perceptual ambiguity, resulting in better accuracy.”

The research team is now looking to build a VR and AR system for use in areas where high-precision recognition and control are desired, such as entertainment technologies and rehabilitation in the home.
